Math AI: Maximum Likelihood Estimation (MLE) Evolve To EM Algorithm For Incomplete/Hidden Data To Variational Bayesian Inference

Major Reference

- [@poczosCllusteringEM2015]

- [@matasExpectationMaximization2018] good reference

- [@choyExpectationMaximization2017]

- [@tzikasVariationalApproximation2008] excellent introductory paper

Maximum Likelihood Estimation 和應用

Maximum likelihood estimation (MLE) 最大概似估計是一種估計模型參數的方法。適用時機在於手邊有模型,但是模型參數有無限多種,透過真實觀察到的樣本資訊,想辦法導出最有可能產生這些樣本結果的模型參數,也就是挑選使其概似性(Likelihood)最高的一組模型參數,這系列找參數的過程稱為最大概似估計法。

Bernoulli distribution:投擲硬幣正面的機率 , 反面的機率

. 連續投擲的正面/反面的次數分別是 H/T. Likelihood function 為

MLE 在無限個 中,找到一個使概似性最大的

, i.e.

只要 likelihood function 一次微分,可以得到

就是平均值,推導出來的模型參數符合直覺。

Normal distribution: 假設 mean unknown, variance known, 我們可以用 maximum log-likelihood function

$latex \begin{aligned}

&\frac{\mathrm{d}}{d \mu}\left(\sum_{i=1}^{n}-\frac{\left(x_{i}-\mu\right)^{2}}{2 \sigma^{2}}\right)=\sum_{\mathrm{i}=1}^{\mathrm{n}} \frac{\left(x_{i}-\hat{\mu}\right)}{\sigma^{2}}=\sum_{i=1}^{n} x_{i}-n \hat{\mathrm{u}}=0 \\

&\hat{\mu}=\overline{\mathrm{X}}=\frac{\sum_{i=1}^{n} x_{i}}{n}

\end{aligned} $

微分的結果告訴我們,樣本的平均值,其實就是母體平均值 最好的估計!又是一個相當符合直覺的答案,似乎 MLE 只是用來驗證直覺的工具。

這是一個錯覺,常見的 distribution (e.g. Bernoulli, normal distribution) 都是 exponential families. 可以證明 maximum log-likelihood functions of exponential families 都是 concave function, 沒有 local minimum. 非常容易用數值方法找到最佳解,而且大多有 analytical solution.

但只要 distribution function 更複雜一點,例如兩個 normal distribution weighted sum to 1, MLE 就非常難解。這是 EM algorithm 為什麼非常有用。

Maximum Likelihood Estimation (MLE) vs. EM

- EM seems to be a special case for MLE?

- Yes! If the problem can be formulated as MLE of incomplete/hidden data. Then EM algorithm 的 E-step is guessing incomplete/hidden data; M-step 就對應 MLE with some modification (見本文後段)。

- Sometimes MLE is very hard to solve because of too many parameters to estimation (mean, variance, initial distribution in GMM; or initial distribution, transition probability, emission probability in HMM); or missing data or missing label!!

- Too many parameters can be seen as incomplete/hidden data. see the following GMM example. It can be regards as missing label.

- In the above case, EM is a specialized MLE with iterative approach to solve the MLE.

- Yes. Specifically, the M step is MLE.

- EM ~~ iterative MLE (maybe local minimum)

- EM is more general than MLE because it can apply to parameters and missing data?

- The same thing, unknown parameters can be regarded as missing information/data. In the M step, we guess the missing data. In the E step, we use MLE to compute it, and so on.

Toy Example [@matasExpectationMaximization2018]

前提摘要

一個簡單例子觀察 temperature and amount of snow (溫度和雪量, both are binary input) 的 joint probability depending on two "scalar factors" and

as

注意 and

are parameters, 不是 conditional probability.

另外因為機率和為 1 做為一個 constraint:

問題一: MLE

一個 ski-center 觀察 天的溫度和雪量得到以下的統計,

, 如何估計

and

?

Likelihood function (就是 joint pdf of repeat experiments)

where 是 MLE 無關的常數

問題改成 maximum log-likelihood with constraint and

$latex \begin{gathered}

\frac{\partial L}{\partial a}=N_{00} \frac{1}{a}+N_{01} \frac{1}{a}+6 \lambda=0 \\

\frac{\partial L}{\partial b}=N_{10} \frac{1}{b}+N_{11} \frac{1}{b}+4 \lambda=0 \\

\frac{\partial L}{\partial \lambda}=6 a+4 b – 1 = 0

\end{gathered} $

上述方程式的解為

結果很直觀。其實就是利用大數法則:

再來大數法則 (a+5a)N~N00+N01; (3b+b)N~N10+N11 => a = .. ; b = …

問題二 incomplete/hidden Data

假設我們無法觀察到完整的"溫度和雪量“;而是“溫度或雪量”,有時“溫度”,有時“雪量”,但不是同時。對應的不是 joint pdf, 而是 marginal pdf 如下:

觀察如下:

The Lagrangian (log-likelihood with constraint)

此時的方程式比起之前複雜的多,不一定有 close-form solution:

$latex \begin{gathered}

\frac{\partial L}{\partial a}=\frac{T_{0}}{a}+\frac{S_{0}}{a+3 b}+\frac{5 S_{1}}{5 a+b}+6 \lambda=0 \\

\frac{\partial L}{\partial b}=\frac{T_{1}}{b}+\frac{3 S_{0}}{a+3 b}+\frac{S_{1}}{5 a+b}+4 \lambda=0 \\

6 a+4 b=1

\end{gathered} $

如果用大數法則:

注意不論 1. or 2. 都滿足constraint, 可以用來估計

and

.

問題是我們要用那一組? 單獨用一組都會損失一些 information, 應該要 combine 1 and 2 的 information, how?

思路一 平均 (a, b) from 1 and 2. 但這不是好的策略,因為平均 (a,b) 不一定滿足 constraint. 在這個 case 因為 linear constraint, 所以平均 (a,b) 仍然滿足 constraint. 但對於更複雜 constraint, 平均並非好的方法。

更重要的是平均並無法代表 maximum likelihood in the above equation. 我們的目標是 maximum likelihood, 平均 (a, b) 完全無法保證會得到更好的 likelihood value!

或者把 (a,b) from 1 or 2 代入上述 likelihood function 取大值。顯然這也不是最好的策略。因為一半的資訊被捨棄了。

思路二 比較好的方法是想辦法用迭代法解微分後的 Lagrange multiplier 聯立方程式。 (a, b) from 1. or 2. 只作為 initial solution, 想辦法從聯立方程式找出 iterative formula. 這似乎是對的方向,問題是 Lagrange multiplier optimization 是解聯立(level 1)微分方程式。不一定有 close form as in this example. 同時也無法保證收斂。另外如何找出 iterative formula 似乎是 case-by-case, 沒有一致的方式。

=> iterative solution is one of the key, but NOT on Lagrange multiplier (level 1)

思路三 既然是 missing data, 我們是否可以假設 fill missing data

update

update missing data

具體做法

有了 可以重新估計

using joint pdf

Q: 如何證明這個方法是最佳或是對應 complete data MLE or incomplete/hidden data MLE? 甚至會收斂?

EM algorithm 邏輯

前提摘要

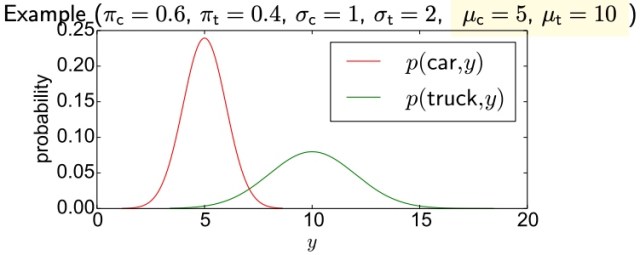

GMM 特例:Estimate Means of Two Gaussian Distributions (known variance and ratio; unknown means)

We measure lengths of vehicles. The observation space is two-dimensional, with capturing vehicle type (binary) and

capturing length (Gaussian).

{car, truck},

where

問題三 Complete Data (Easy case)

Log-likelihood

很容易用 MLE 估計

直觀上很容易理解。如果 observations 已經分組,求 mean 只要做 sample 的平均即可。

以這個例子,ratio 不論已知或未知,都不影響結果。

問題四 incomplete/hidden Data

$latex \mathcal{T}=\{\left(\operatorname{car}, y_{1}^{(c)}\right), \ldots,\left(\operatorname{car}, y_{C}^{(c)}\right),\left(\text {truck}, y_{1}^{(\mathrm{t})}\right), \ldots,\left(\text {truck}, y_{T}^{(\mathrm{t})}\right), \underbrace{\left(\bullet, y_{1}^{\bullet}\right), \ldots,\left(\bullet, y_{M}^{\bullet}\right)}_{\begin{array}{l}

\text { data with uknown } \\

\text { vehicle type }

\end{array}}\} $

Log-likelihood

$latex \ell(\mathcal{T})=\sum_{i=1}^{N} \ln p\left(x_{i}, y_{i} \mid \mu_{c}, \mu_{\mathrm{t}}\right)=\overbrace{C \ln \kappa_{\mathrm{c}}-\frac{1}{2} \sum_{i=1}^{C}\left(y_{i}^{(c)}-\mu_{\mathrm{c}}\right)^{2}+T \ln \kappa_{\mathrm{t}}-\frac{1}{8} \sum_{i=1}^{T}\left(y_{i}^{(\mathrm{t})}-\mu_{\mathrm{t}}\right)^{2}}^{\text {same term as before }} \\

+\sum_{i=1}^{M} \ln \left(\kappa_{\mathrm{c}} \exp \left\{-\frac{1}{2}\left(y_{i}^{\bullet}-\mu_{\mathrm{c}}\right)^{2}\right\}+\kappa_{\mathrm{t}} \exp \left\{-\frac{1}{8}\left(y_{i}^{\bullet}-\mu_{\mathrm{t}}\right)^{2}\right\}\right) $

不用微分也知道非常難解 MLE. 我們必須用另外的方法,就是 EM 算法。

不過我們還是微分一下,得到更多的 insights.

$latex \begin{aligned}

0=\frac{\partial \ell(\mathcal{T})}{\partial \mu_{\mathrm{c}}} &=\sum_{i=1}^{C}\left(y_{\mathrm{c}}^{(\mathrm{c})}-\mu_{\mathrm{c}}\right) \\

&+ \sum_{i=1}^{M} \overbrace{\frac{\kappa_{\mathrm{c}} \exp \left\{-\frac{1}{2}\left(y_{i}^{\bullet}-\mu_{\mathrm{c}}\right)^{2}\right\}}{\kappa_{\mathrm{c}} \exp \left\{-\frac{1}{2}\left(y_{i}^{\bullet}-\mu_{\mathrm{c}}\right)^{2}\right\}+\kappa_{\mathrm{t}} \exp \left\{-\frac{1}{8}\left(y_{i}^{\bullet}-\mu_{\mathrm{t}}\right)^{2}\right\}}}^{p\left(\operatorname{car} \mid y_{i}^{\bullet}, \mu_{\mathrm{c}}, \mu_{\mathrm{t}}\right)}\left(y_{i}^{\bullet}-\mu_{\mathrm{c}}\right)

\end{aligned} $

上兩式非常有物理意義。基本是 easy case 的延伸:已知分類的平均值,加上未知分類的機率平均值。一個簡單的方法是只取前面已知的部分平均,不過這不是最佳,因為丟失部分的資訊。

Missing Values, EM Approach

重新 summarize optimality conditions

如果 和

已知,上式非常容易解

and

。實際這是一個雞生蛋、蛋生雞的問題,因為這兩個機率又和

and

相關。

EM algorithm 剛好用來打破這個迴圈。

- Let

denote the missing data. Define

- 上述 optimality equations 可以得到

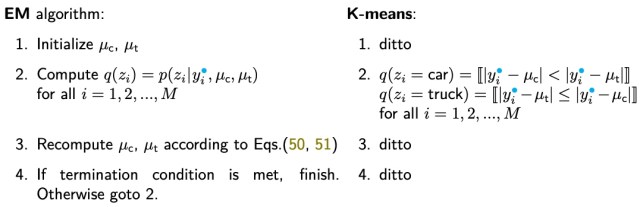

EM Algorithm 可以用以下四步驟表示

- Initialize

,

- Compute

for

- Recompute

,

according to the above equations.

- If termination condition is met, finish. Otherwise, goto 2.

上述步驟 2 稱為 Expectation (E) Step, 步驟 3 稱為 Maximization (M) Step. 統稱為 EM algorithm.

Q. Why Step 2 稱為 Expectation? not clear. Maximization 比較容易理解,因為 optimality condition 就是 maximization (微分為 0).

In summary, EM algorithm 的一個關鍵點是:讓 incomplete/hidden data 變成 complete (Expectation?). 有了完整的 data, 就容易用 MLE 找到 maximal likelihood estimation ( and

in this case).

Clustering: Soft Assignment Vs. Hard Assignment (K-means)

EM Algorithm Derivation

EM algorithm 如果只是 heuristic algorithm, 可能有用度減半。這裡討論數學上的 justification. 先定義 terminologies

: all observed values

: all unobserved values (i.e. hidden variable)

: model parameters to be estimated

目標:Find using the Maximum Likelihood approach

思路:假設解下列完整 data 很容易解 (例如上面的 exmple xx and xx)

我們的想法是把上上式改寫成上式,再加以優化

$latex \begin{aligned}

\ln p(\mathbf{o} \mid \boldsymbol{\theta}) &=\ln \sum_{\mathbf{z}} p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta}) \\

&=\ln \sum_{\mathbf{z}} q(\mathbf{z}) \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})}

\end{aligned} $

這裡引入 distribution with

. 上式利用 Jensen's inequality 可以導出

Log-Likelihood with Hidden Variable Lower Bound

我們定義 的 lower bound

, for any distribution

.

這已經非常接近思路!

Maximizing by choosing

就可以 push the log likelihood

upwards.

反過來我們可以計算和 lower bound 之間的 gap.

$latex \begin{aligned}

\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\mathcal{L}(q, \boldsymbol{\theta}) &=\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})} \\

&=\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\sum_{\mathbf{z}} q(\mathbf{z})\{\ln \underbrace{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}_{p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) p(\mathbf{o} \mid \boldsymbol{\theta})}-\ln q(\mathbf{z})\} \\

&=\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\sum_{\mathbf{z}} q(\mathbf{z})\{\ln p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta})+\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\ln q(\mathbf{z})\} \\

&=\ln p(\mathbf{o} \mid \boldsymbol{\theta})-\underbrace{\sum_{\mathbf{z}} q(\mathbf{z})}_{1} \ln p(\mathbf{o} \mid \boldsymbol{\theta})-\sum_{\mathbf{z}} q(\mathbf{z})\{\ln p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta})-\ln q(\mathbf{z})\} \\

&=-\sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta})}{q(\mathbf{z})} \\

&= D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) ) \ge 0

\end{aligned} $

這個 gap 就是 KL divergence between and

, 永遠大於 0.

如果能找到 ,就可以讓 gap 變成 0.

EM Algorithm Push the Lower Bound Upwards

Log likelihood function 可以分為兩個部分: lower bound + gap

從 Jensen's inequality 得到 是 lower bound. 同樣從 KL divergence

0 再度驗證。

如果 , the bound is tight.

Example 1: Hidden variable  does NOT provide any information of

does NOT provide any information of

假設 和

完全無關,

. We can make

such that , 也就是 gap = 0.

Lower bound 就變成原來的 log-likelihood function.

$latex \begin{aligned}

\mathcal{L}(q, \boldsymbol{\theta}) &= \sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})}\\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{o} \mid \boldsymbol{\theta}) p(\mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})} \\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln p(\mathbf{o} \mid \boldsymbol{\theta})\\

&= \ln p(\mathbf{o} \mid \boldsymbol{\theta})

\end{aligned} $

Example 2:

$latex \begin{aligned}

\mathcal{L}(q, \boldsymbol{\theta}) &= \sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})}\\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) p(\mathbf{o} \mid \boldsymbol{\theta})}{q(\mathbf{z})} \\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln p(\mathbf{o} \mid \boldsymbol{\theta})\\

&= \ln p(\mathbf{o} \mid \boldsymbol{\theta})

\end{aligned} $

What is the mutual information of and

in this case?

EM 具體步驟

Lower bound

Lower bound 其實可以視為 MLE of complete data,對應 EM algorithm 的 M step.

Gap 可以視為從 observables 推論出 unobservables, i.e. incomplete/hidden data, 對應 EM algorithm 的 E step.

Lower bound 包含兩個部分: (1) log-likelihood of complete data (both and

) MLE for

estimation; (2)

distribution.

這兩個部分剛好對應 EM algorithm 的 E-step and M-step.

- Initialize

- E-step (Expectation):

- M-step (Maximization):

M-step:  is fixed

is fixed

我們先看 M-step, 因為這和 MLE estimate 非常相似。

$latex \begin{aligned}

\mathcal{L}\left(q^{(t+1)}, \boldsymbol{\theta}\right) &=\sum_{\mathbf{z}} q^{(t+1)}(\mathbf{z}) \ln \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q^{(t+1)}(\mathbf{z})} \\

&=\sum_{\mathbf{z}} q^{(t+1)}(\mathbf{z}) \ln p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})-\underbrace{\sum_{\mathbf{z}} q^{(t+1)}(\mathbf{z}) \ln q^{(t+1)}(\mathbf{z})}_{\text {const. }}

\end{aligned} $

注意 M-Step 和完整 data 的 MLE 思路如下非常接近,只加了對 的 weighted sum.

上式微分等於 0 就可以解 。上面問題四以及問題二就是很好的例子。

另一個常見的寫法

注意 M-Step 是 maximize lower bound, 並不等於 maximize 不完整 data 的 MLE,因為還差了一個 gap function (i.e. KL divergence), which is also dependent.

E-step:  is fixed

is fixed

以上 KL divergence 大於等於 0,所以 maximize lower bound 就要讓 要選擇 儘量縮小 gap (i.e. KL divergence) 到 0. 前面提到等於 0 的條件就是

同樣 E-Step 深具物理意義,就是猜 incomplete/hidden data distribution based on 已知的 observables 和 iterative .

例如問題四 E-Step 就是計算 for

Conditional Vs. Joint Distribution

我們可以把 conditional distribution 改成 joint distribution 如下。兩者都可以用來解 E-Step.

結合 E-Step and M-Step 的另一種寫法 (這是 EM 的精髓,假設可以有一個 close from Q function, e.g. GMM)

In summary: M-Step maximize the lower bound; E-Step close the gap

E-Step

M-Step

Define new E-Step (plug E-Step to M-Step)

$latex \begin{aligned}

Q(\theta^{t+1} | \theta^{t}) &= \sum_{\mathbf{z}} p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}^{(t)}) \ln p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta}^{t+1}) \\

&= \int d \mathbf{z} \, p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}^{(t)}) \ln p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta}^{t+1})

\end{aligned} $

new M Step

Free Energy Interpretation [@poczosCllusteringEM2015]

搞 machine learning 很多是物理學家 (e.g. Max Welling), 習慣用物理觀念套用於 machine learning. 常見的例子是 training 的 momentum method. 另一個是 energy/entropy loss function. 此處我們看的是類似 energy loss function.

我們從 gap 開始

$latex \begin{aligned}

\ln p(\mathbf{o} \mid \boldsymbol{\theta}) &= \mathcal{L}(q, \boldsymbol{\theta}) + D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) ) \\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln \frac{p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta})}{q(\mathbf{z})} + D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) ) \\

&= \sum_{\mathbf{z}} q(\mathbf{z}) \ln p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta}) + \sum_{\mathbf{z}} -q(\mathbf{z}) \ln {q(\mathbf{z})}+ D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) ) \\

&= E_{q(z)} \ln p(\mathbf{o}, \mathbf{z} \mid \boldsymbol{\theta}) + H(q) + D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid \mathbf{o}, \boldsymbol{\theta}) ) \\

\end{aligned} $

where H(q) is the entropy of q, 第一項是負的,第二項和第三項是正的。

我們用一個例子來驗證

q = {0 or 1} with 50% chance, =>

H(q) = 1 (bit) or ln (?) > 0

Eq(z) ln p(o, z) = -(0.5 (o-u1)2 + 0.5 (o-u2)2 ) / sqrt(2pi) < 0

此處我們 switch to [@poczosCllusteringEM2015] notation.

- Observed data:

- Unobserved/hidden variable:

- Parameter:

- Goal:

重寫上式:

$latex \begin{aligned}

\ln p(D \mid \boldsymbol{\theta}^t) &= \sum_{\mathbf{z}} q(\mathbf{z}) \ln p(D, \mathbf{z} \mid \boldsymbol{\theta}^t) + \sum_{\mathbf{z}} -q(\mathbf{z}) \ln {q(\mathbf{z})}+ D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid D, \boldsymbol{\theta}^t) ) \\

&= E_{q(z)} \ln p(D, \mathbf{z} \mid \boldsymbol{\theta}) + H(q) + D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid D, \boldsymbol{\theta}) ) \\

&= F_{\theta^t} (q(\cdot), D) + D_{\mathrm{KL}}(q(\mathbf{z}) \| p(\mathbf{z} \mid D, \boldsymbol{\theta}) )

\end{aligned} $

稱為 free energy, 包含 joint distribution expectation 和 self-entropy. 或是稱為 ELBO (Evidence lower bound)

如果 is analytically available (e.g. GMM, this is just a softmax!). The ELBO becomes a Q(theta, thetaold) function + H(q)

The EM algorithm can be summzied as argmax Q!!

- E-step:

- M-step; argmax …

We can prove

- log likelihood is always increasing!

-

-

Use multiple, randomized initialization in practice.

Variational Expectation Maximization

EM algorithm 一個問題是對於複雜的問題沒有 close from p(z|x), then toast!

Appendix

問題二的 Conditional Vs. Joint Distribution 解法

我們之前的 E-Step 是猜 joint distribution, .

| a | 5a | |

| 3b | b |

如果用上述的 conditional distribution 可以細膩的看每一個 data.

-

對於所有

$latex q(t \mid s_0, a, b)=\left\{\begin{array}{l}

q\left(t_{0}\right)=p\left(t_{0} \mid s_{0}, a, b\right)=\frac{a}{a+3 b} \\

q\left(t_{1}\right)=p\left(t_{1} \mid s_{0}, a, b\right)=\frac{3 b}{a+3 b}

\end{array}\right. $ -

對於所有

$latex q(t \mid s_1, a, b)=\left\{\begin{array}{l}

q\left(t_{0}\right)=p\left(t_{0} \mid s_{1}, a, b\right)=\frac{5a}{5 a+ b} \\

q\left(t_{1}\right)=p\left(t_{1} \mid s_{1}, a, b\right)=\frac{b}{5 a+ b}

\end{array}\right. $ -

對於所有

$latex q(s \mid t_0, a, b)=\left\{\begin{array}{l}

q\left(s_{0}\right)=p\left(s_{0} \mid t_{0}, a, b\right)=\frac{1}{6} \\

q\left(s_{1}\right)=p\left(s_{1} \mid t_{0}, a, b\right)=\frac{5}{6}

\end{array}\right. $ -

對於所有

$latex q(s \mid t_1, a, b)=\left\{\begin{array}{l}

q\left(s_{0}\right)=p\left(s_{0} \mid t_{1}, a, b\right)=\frac{3}{4} \\

q\left(s_{1}\right)=p\left(s_{1} \mid t_{1}, a, b\right)=\frac{1}{4}

\end{array}\right. $

再來是問題二的 M-Step

最後再把所有 dataset 的 weighted sum 統計出來,例如

個

個

和

個

個

個

和

個

個

個

和

個

個

個

和

個

個數

個數

個數

個數

因此可以使用完整 data 的 MLE estimation:

To Do Next

Go through GMM example.

下一步 go through HMM model or simplest z -> o graph model.